Reading time approx. 5 minutes

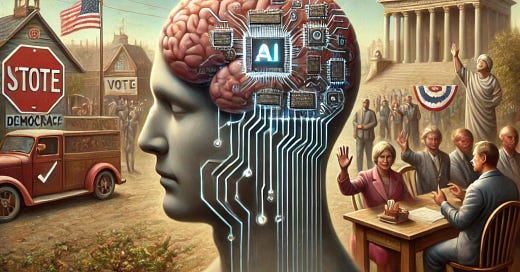

Good morning! Let’s dive into this Tuesday together because, as always, "It is perfectly possible to be both rational and wrong." Our Chief Behavioral Officer tool today: Yuval Noah Harari’s latest book Nexus, how our own biases amplify the risks of AI, and what we can do to flip that script.

How Our Biases Can Be a Path to Stronger Democracies

The other night, I curled up with Nexus: A Brief History of Information Networks from the Stone Age to AI, Harari’s deep dive into how information networks evolved and the looming challenges AI poses to democracy. The book shook me because it shows that AI is more than just a tool: Harari calls it an "alien intelligence" that can outsmart and manipulate our minds. Scary, right? But for all of Harari’s warnings, I couldn't help but wonder: What if we could work around these dangers and actually use AI to strengthen democracy?

How does it work? Science, baby!

Let’s start with the idea that AI doesn't have to be a runaway train. Harari himself suggests that while the risks are real, they are not inevitable. Just as AI can be used to manipulate biases, it can also be designed to correct or counterbalance them. For example, while confirmation bias can lead to echo chambers, Harari envisions AI being programmed to actively introduce dissonance—exposing users to diverse, even challenging perspectives. Imagine a newsfeed where the algorithms are designed to offer balanced views, nudging us toward deeper critical thinking by showing content that is well-rounded rather than one-sided.

This concept ties into what Harari calls "designing for friction." In a world that increasingly favors convenience, AI could be built to slow us down—not by overwhelming us, but by encouraging us to pause and reflect before making decisions. Think of it like a well-intentioned speed bump in our fast-paced digital lives. AI platforms could prompt users to verify sources before sharing content or provide counter-arguments to viral posts, reminding us to question what we consume.

Harari also believes that AI could support deliberative democracy—a model where AI helps people engage in meaningful conversations about political issues, filtering through the noise to provide well-researched insights and ensuring that minority opinions aren’t drowned out by popular narratives. This way, AI could enhance public discourse rather than degrade it.

Why is this important?

Because AI's potential to strengthen democracy is just as powerful as its ability to undermine it. Harari emphasizes that, for all the fear surrounding AI, humans are still very much in control of the systems we build. By leveraging AI to create transparency, enhance deliberation, and challenge our biases, we can reclaim the narrative.

Take the availability heuristic, our tendency to judge how important something is by how easily it comes to mind. Today's feeds feed that bias by making the most recent or popular information look like the most important. But with the right design, AI could be programmed to prioritize relevance and credibility over mere popularity. Harari suggests that instead of amplifying sensationalism, AI platforms could shift the focus to well-sourced, thoughtful content that encourages informed decision-making. Imagine an AI that helps citizens rank the importance of news based on global impact rather than on clickbait metrics.
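For the curious, here is a rough sketch of what "relevance and credibility over popularity" could look like in a feed. It is a toy illustration, not how any real platform works: the `Story` fields, the `credibility` and `relevance` scores, and the equal weights are all assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass
class Story:
    title: str
    clicks: int         # raw popularity signal
    credibility: float  # 0.0 to 1.0, hypothetical source-quality score
    relevance: float    # 0.0 to 1.0, hypothetical impact score


def rank_by_popularity(stories):
    """Order stories the 'clickbait' way: most clicks first."""
    return sorted(stories, key=lambda s: s.clicks, reverse=True)


def rank_by_quality(stories, w_cred=0.5, w_rel=0.5):
    """Order stories by a weighted mix of credibility and relevance.

    Clicks are deliberately ignored. The weights are illustrative assumptions.
    """
    return sorted(
        stories,
        key=lambda s: w_cred * s.credibility + w_rel * s.relevance,
        reverse=True,
    )


stories = [
    Story("Celebrity feud goes viral", clicks=90000, credibility=0.3, relevance=0.1),
    Story("Parliament passes budget reform", clicks=4000, credibility=0.9, relevance=0.8),
    Story("Regional flood warning issued", clicks=12000, credibility=0.85, relevance=0.9),
]

popular_titles = [s.title for s in rank_by_popularity(stories)]
quality_titles = [s.title for s in rank_by_quality(stories)]

print("By popularity:", popular_titles)
print("By quality:   ", quality_titles)
```

The same three stories come out in a very different order depending on which signal the feed optimizes for. The hard part in practice is not the sorting but deciding who assigns the credibility and relevance scores, which is exactly where the transparency question comes in.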

Likewise, algorithmic transparency is critical. Harari insists that we can demand transparency in how AI curates information. If we understand why certain stories are being shown to us, we become more active participants in our own information diets, making it harder for AI to manipulate us.

Lastly, Harari believes in the power of pluralism. AI, instead of reinforcing groupthink, could become a tool for protecting diversity in political dialogue. Platforms could be designed to ensure that unpopular but important viewpoints aren’t lost in the shuffle. This would encourage a more inclusive, participatory form of democracy where everyone’s voice can be heard.

And now?

So, how can we take action today, with all this in mind? Here are a few ideas, grounded in Harari’s thinking, that can help us navigate the intersection of AI and democracy with optimism:

Advocate for friction in your digital spaces: Seek out platforms or tools that encourage reflection rather than impulsivity. When sharing content or forming an opinion, take a moment to question the source and consider alternative viewpoints.

Demand transparency from AI systems: Whether it’s on social media, search engines, or news apps, push for platforms that reveal how their algorithms work. You have the right to know why certain content is being shown to you and how your biases are being shaped.

Embrace diverse perspectives: Don’t just tolerate dissenting voices—actively seek them out. Harari believes in the importance of pluralism in democratic discourse. Use AI to expose yourself to a wide range of opinions, especially ones that challenge your preconceptions.

Support AI-driven civic engagement: Find ways to get involved in platforms that use AI to enhance deliberative democracy. Look for tools that simplify complex policies, encourage citizen participation, or help you engage with policy discussions in a meaningful way.

Bottom line

AI doesn’t have to be democracy’s downfall. If we’re thoughtful in how we design and use these technologies, AI can actually strengthen democratic systems by fostering transparency, encouraging critical thinking, and supporting inclusive public discourse.

Here’s your checklist to turn the AI-bias relationship in democracy’s favor:

Design for friction: Support platforms that encourage reflection before reaction.

Push for algorithmic transparency: Know how the AI systems you use influence your decisions.

Pluralism over groupthink: Use AI to broaden your horizons, not narrow them.

Participate in AI-enhanced civic engagement: Use AI to inform your participation in democracy, not just your consumption of information.

Chief Behavioral Officer Wanted

Where can you be a Chief Behavioral Officer this week? Start by asking how the platforms you engage with are shaping your political views. Are they reinforcing your biases or opening you up to a broader, more diverse conversation? And how can you use AI to enhance democracy in your daily life?

See you next Tuesday.

If you would like to send us any tips or feedback, please email us at redaktion@cbo.news. Thank you very much.

The desire for transparency is very understandable, but it is not easy to fulfill, especially with complex systems. Such AI models are often called "black boxes" because their internal processes are not transparent to outsiders. Even the developers sometimes cannot explain exactly how the model arrived at a particular decision. Often a second program is "sent in afterwards" to make educated guesses about how the original decision might have come about.

https://link.springer.com/chapter/10.1007/978-3-662-64408-9_4

This 2021 article explains it well. Have we made progress since then?
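To make the "second program" idea concrete, here is a minimal sketch of one simple variant: the explainer treats the model as a black box, swaps each input for a neutral baseline value, and watches how the output changes. The model, the feature names, and the all-zero baseline are hypothetical stand-ins chosen for illustration; this is not the method from the linked article.

```python
def black_box(features):
    """Stand-in for an opaque model. The explainer only calls it, never looks inside."""
    return (
        3 * features["income"]
        + 2 * features["years_employed"]
        - 4 * features["open_debts"]
    )


def explain(model, instance, baseline):
    """Estimate each feature's influence on one decision.

    For every feature, replace its value with the baseline value and record
    how much the model's output drops (or rises) as a result.
    """
    original = model(instance)
    influence = {}
    for name in instance:
        perturbed = dict(instance)         # copy so the original stays untouched
        perturbed[name] = baseline[name]   # "switch off" one feature
        influence[name] = original - model(perturbed)
    return influence


applicant = {"income": 5, "years_employed": 2, "open_debts": 1}
neutral = {"income": 0, "years_employed": 0, "open_debts": 0}

influence = explain(black_box, applicant, neutral)
print(influence)
```

Because this toy model simply adds up its inputs, the estimates match the true contributions. With real models, where inputs interact, the same procedure only yields an approximation, which is why "educated guesses" is a fair description.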